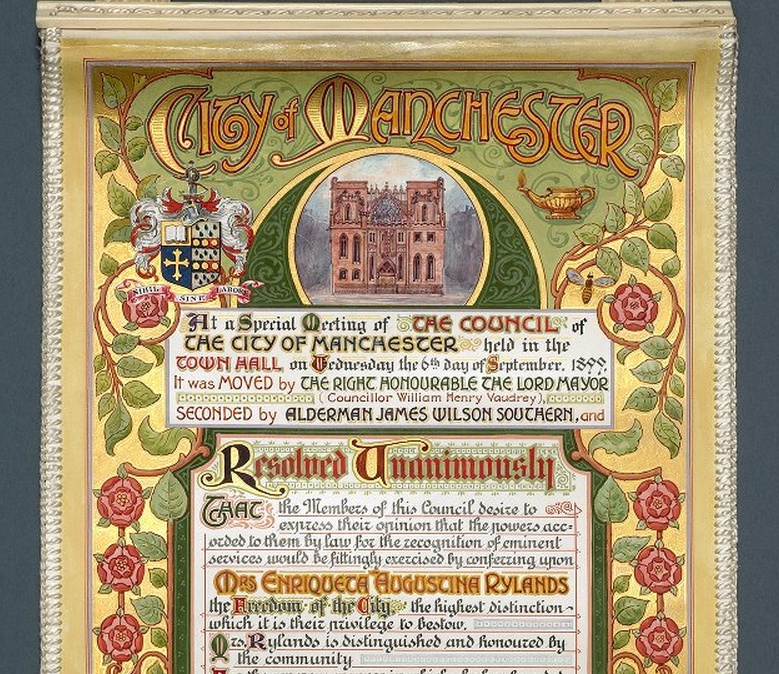

A couple of days ago I visited the beautiful John Rylands Library in Manchester with the family. Within the library is a document recording the honour of “Freedom of the City of Manchester” awarded to Enriqueta Augustina Rylands, third wife of John Rylands, when she founded the library in 1899.

Aside from the beauty and the colourful vibrancy of this document, what struck me was the verbosity and sheer length of the sentences contained within. Here’s a key sub-sentence from the document which is 39 words long and drawn from a parent sentence no less than 73 words long:

“…the members of this council desire to express their opinion that the powers accorded to them by law for the recognition of eminent services would be fittingly exercised by conferring upon Mrs Enriqueta Rylands the Freedom of the City…”

So how do we break down a relatively complex sentence such as this in order to analyse it? The answer is to build a syntax tree, a representation of the sentence decomposed into its constituent sub-sentences, decomposed in turn into noun phrases and verb phrases, decomposed in turn into nouns, verbs and other parts of speech. This is a three-step process:

- Tokenising – splitting the sentence into its constituent entities (mainly words).

- Part of speech tagging – assigning a part of speech to each word.

- Parsing – turning the tagged text into a syntax tree.

I’ll be using nltk to help me. Here goes…

1. Tokenize

Splitting a sentence into words seems like it should be an easy task but the main gotcha is deciding what to do with punctuation such as full stops and apostrophes. Thankfully, nltk just “does the right thing” (or at least it does the same thing predictably and consistently). In our case, there’s no punctuation to worry about so we could just split the sentence on whitespace, but we’ll use the nltk anyway as good practice.

>>> import nltk

>>> sent = 'the members of this council desire to express '

'their opinion that the powers accorded to them by law '

'for the recognition of eminent services would be '

'fittingly exercised by conferring upon Mrs Enriqueta '

'Rylands the Freedom of the City'

>>> tokens = nltk.word_tokenize(sent)

>>> print tokens

['the', 'members', 'of', 'this', 'council', 'desire', 'to', 'express', 'their', 'opinion',

'that', 'the', 'powers', 'accorded', 'to', 'them', 'by', 'law', 'for', 'the', 'recognition',

'of', 'eminent', 'services', 'would', 'be', 'fittingly', 'exercised', 'by', 'conferring',

'upon', 'Mrs', 'Enriqueta', 'Rylands', 'the', 'Freedom', 'of', 'the', 'City']

2. Tag

Part of speech tagging is also catered for by the nltk. The built in tagger uses a maximum entropy classifier and assigns tags from the Penn Treebank Project. A list of tags and guidelines for assigning tags can be found in this document.

>>> nltk.pos_tag(tokens)

[('the', 'DT'), ('members', 'NNS'), ('of', 'IN'), ('this', 'DT'), ('council', 'NN'),

('desire', 'NN'), ('to', 'TO'), ('express', 'NN'), ('their', 'PRP$'), ('opinion', 'NN'),

('that', 'WDT'), ('the', 'DT'), ('powers', 'NNS'), ('accorded', 'VBD'), ('to', 'TO'),

('them', 'PRP'), ('by', 'IN'), ('law', 'NN'), ('for', 'IN'), ('the', 'DT'),

('recognition', 'NN'), ('of', 'IN'), ('eminent', 'NN'), ('services', 'NNS'), ('would', 'MD'),

('be', 'VB'), ('fittingly', 'RB'), ('exercised', 'VBN'), ('by', 'IN'), ('conferring', 'NN'),

('upon', 'IN'), ('Mrs', 'NNP'), ('Enriqueta', 'NNP'), ('Rylands', 'NNPS'), ('the', 'DT'),

('Freedom', 'NNP'), ('of', 'IN'), ('the', 'DT'), ('City', 'NNP')]

As expected, some tagging decisions are questionable and some are just plain wrong. The most common errors tend to be with words which can be used as both nouns and verbs, for example, desire and express. These are incorrectly tagged as nouns rather than verbs as a “best guess” as there are far more nouns than verbs in the English language. By my reckoning, we’ve achieved about 85% accuracy in this sentence with just six manual corrections required:

('desire', 'NN') -> ('desire', 'VB')

('express', 'NN') -> ('express', 'VB')

('that', 'WDT') -> ('that', 'IN')

('accorded', 'VBG') -> ('accorded', 'VBN')

('eminent', 'NN') -> ('eminent', 'JJ')

('conferring', 'NN') -> ('conferring', 'VBG')

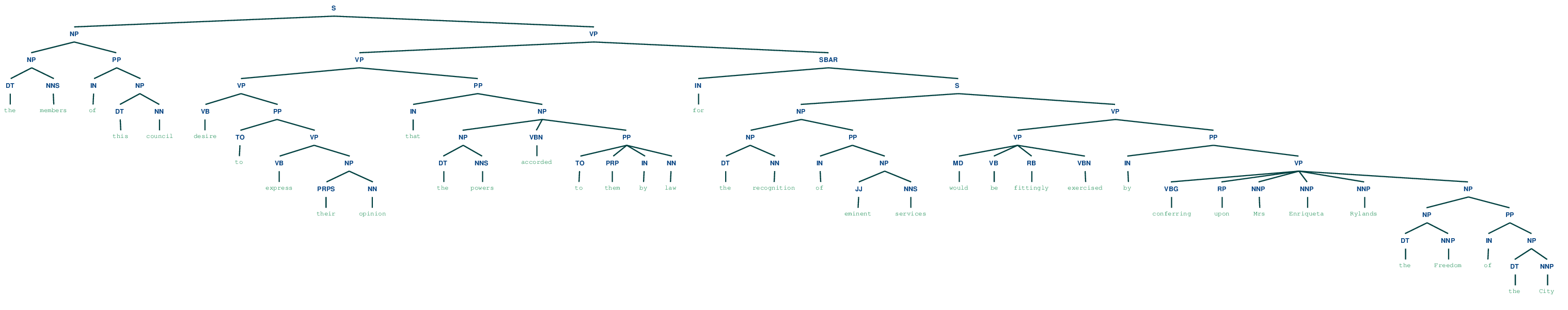

3. Parse

Now the hard part. Analysing sentence structure tends to be a manually intensive process. I’ll start by hand crafting a context free grammar by gradually splitting the sentence into its constituent parts in multiple iterations, for example:

Iteration 1

S = Sentence

NP = Noun Phrase

VP = Verb Phrase

SBAR = Subordinating Clause

IN = Preposition or subordination conjunction.

(S the members of this council desire to express their opinion that the

powers accorded to them by law for the recognition of eminent services

would be fittingly exercised by conferring upon Mrs Enriqueta Rylands

the Freedom of the City)

Iteration 2

(S

(NP the members of this council)

(VP desire to express their opinion that the powers accorded to them by

law for the recognition of eminent services would be fittingly exercised

by conferring upon Mrs Enriqueta Rylands the Freedom of the City))

Iteration 3

(S

(NP the members of this council)

(VP

(VP desire to express their opinion)

(SBAR

(IN that)

(S the powers accorded to them by law for the recognition of eminent

services would be fittingly exercised by conferring upon Mrs Enriqueta

Rylands the Freedom of the City))))

etc…

By repeating this process, the following grammar is produced, shown here together with an application to display the generated syntax tree.

import nltk

sent = 'the members of this council desire to express '

'their opinion that the powers accorded to them by law '

'for the recognition of eminent services would be '

'fittingly exercised by conferring upon Mrs Enriqueta '

'Rylands the Freedom of the City'

tokens = nltk.word_tokenize(sent)

grammar = """

S -> NP VP

NP -> NP PP | DT NNS | DT NN | PRPS NN | NP IN NP | NP VBN PP | JJ NNS | DT NNP

PP -> IN NP | TO VP | TO PRP IN NN | IN VP

SBAR -> IN S

VP -> VP SBAR | VB PP | VB NP | VP NP | VP PP | MD VB RB VBN | VBG RP NNP NNP NNP NP

DT -> 'the' | 'this'

NNS -> 'members' | 'powers' | 'services'

IN -> 'of' | 'that' | 'by' | 'for'

NN -> 'council' | 'opinion' | 'law' | 'recognition'

VB -> 'desire' | 'express' | 'be'

TO -> 'to'

PRPS -> 'their'

VBN -> 'accorded' | 'exercised'

PRP -> 'them'

JJ -> 'eminent'

MD -> 'would'

RB -> 'fittingly'

VBG -> 'conferring'

RP -> 'upon'

NNP -> 'Mrs' | 'Enriqueta' | 'Rylands' | 'Freedom' | 'City'

"""

parser = nltk.ChartParser(nltk.parse_cfg(grammar))

trees = parser.nbest_parse(tokens)

trees[0].draw()

This grammar results in no less than 1956 different possible syntax trees for this sentence (in theory meaning that this sentence could be interpreted in up to 1956 different ways).

The first of these syntax trees has a maximum depth of 11. Contrast this with a sentence such as “the cat sat on the mat” with a maximum depth of approximately 5. The depth of the syntax tree gives a feel for the complexity of the sentence and the depth of sub-sentences, sub-clauses and dependent phrases within the sentence.

Now when it comes to considering how the human brain might parse and understand this sentence, it might be interesting to consider whether the depth of the syntax tree can be thought of similarly to the stack depth in a running application. Does the human brain contain a stack for parking sentence fragments as a complex sentence unfolds? Is there a maximum stack depth, and if so, does this vary greatly from person to person?

Complex sentences certainly require more concentration to understand and perhaps the phrase: “Could you repeat that, please!” is the direct result of a cerebral stack overflow error!